So you can actually work around a slow regex engine. (It's also worthwhile if you have a fast regex engine!) If we look at the user time in the regex benchmark results, we can see that grep is much more efficient than grup and sift. However, the brute-force multithreaded approach of grup and sift means that grep falls behind on wall-clock time.

I need to profile more to figure out the most impactful optimizations, but there are a few obvious ones, like parallel directory traversal à la ripgrep. Also, faster output with a buffer: currently, each matching line is a separate print call! There are also product features I could add to round out the tool (right now, grup is effectively a technical demo), like common flags, syntax highlighting, and ignoring hidden/binary files. Plus, I've always wanted to ship an application via Homebrew.

By default, ripgrep ignores the same files as your .gitignore, and it also skips hidden files and binary files. This is one of the key wins of ripgrep, and part of how it fits so well into the standard development workflow.

Here's something I commonly do: search 99k files (1.3GB) in 11k directories for two matches. It's a recursive fixed-string search whose output displays the line count for the text-file match but not for the binary-file match (standard grep behaviour).

The regex benchmark, by contrast, stresses the regex implementation more than anything else. Yeah, Go's regex engine really hurts you there!

More thoughts by Gallant on these results: ripgrep is built on top of Rust's regex engine, which uses finite automata, SIMD, and aggressive literal optimizations. GNU grep should also do well here. It should pull out the 'Facebook' literal, look for lines containing that, and then only run the full regex engine on those lines.

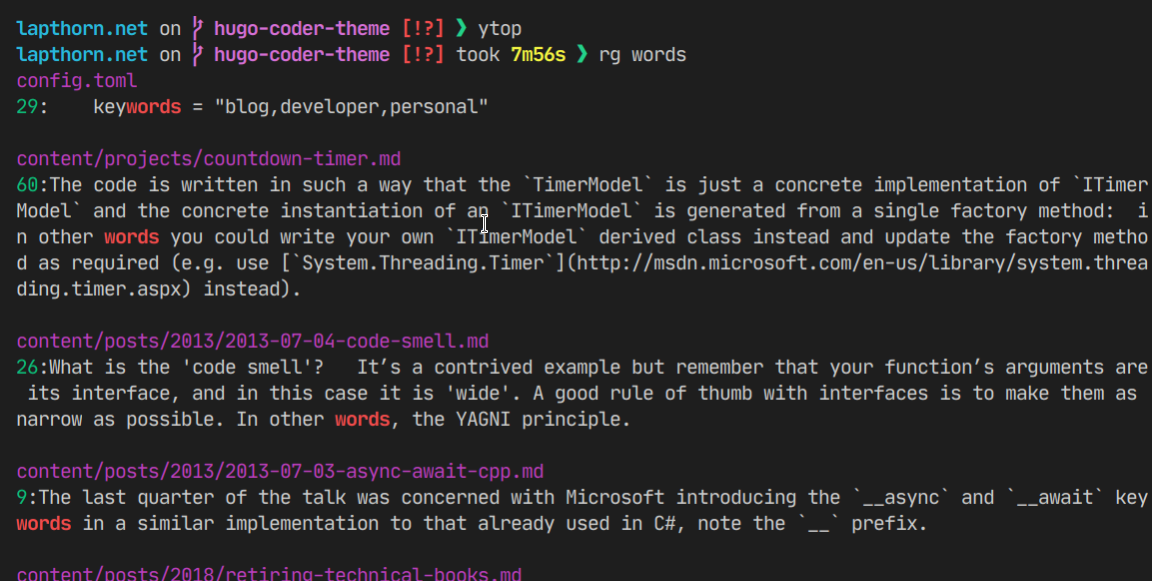

The tool I built for this post is called grup, and it lives inside my terminal tools monorepo, a project to take ownership of the commands I run every day. The plan: traverse directories, search files in parallel, and try not to look at every character.

The recommended directory traversal function in Go is filepath.WalkDir, but it walks files in lexical order, which means sorting them. In my personal use of grep, I don't need deterministic output. Instead, I hand-wrote a traversal function that passes search jobs over a channel to waiting search workers.

A large number of search workers running in goroutines is the real performance win over grep here. Goroutines play very nicely with blocking system calls and add very little overhead. These search workers zoom over my SSD, gobbling up bytes. The other optimizations here barely register in comparison. Grep is single threaded, my version is multithreaded, end of article, etc.

A naïve string search will look at every single character. Instead, I use the Boyer–Moore string-search algorithm. Specifically, I use the internal implementation from Go's standard library. I've changed the types to []byte instead of string to make fewer allocations, but aside from that it's copied and pasted. To support regular expressions, a Regexp object is compiled once.

In practice, you probably want to skip binary files. There are different ways to tell if a file is binary, but the one I used is: does NUL appear in the first buffer? Grep doesn't report line numbers from binary files by default, which means we can do the same and stop reading a binary file as soon as we hit a match.

I set out to beat macOS Monterey's default grep (2.6.0-FreeBSD) in a microbenchmark that represents my daily file searching. I chose Go because I like writing Go programs.

Andrew Gallant's ripgrep introduction post showed us that classic Unix tools like grep (and its later iterations like ag) can be dramatically improved in the areas of raw performance, user experience, and correctness. These modern Unix tools (like ripgrep, bat, jq, exa, or fd) aren't quite drop-in replacements, but they're close enough to avoid paper cuts, and for most use cases they're better than the originals in a programmer's daily workflow. Gallant's treatise on file searching, along with its benchmarks and analysis, got me excited about searching, and the conclusion of his post rings true: string searching is an old problem in computer science, but there is still plenty of work left to do to advance the state of the art.
